Esam Ghaleb

In brief: I develop computational models of multimodal language and behaviours, that is, how speech, text, gesture, sign, facial expressions, and whole-body movement jointly encode meaning in interaction. Previously, I worked at the University of Amsterdam and Maastricht University on linguistic–gestural alignment and on explainable multimodal modelling of human behaviour.

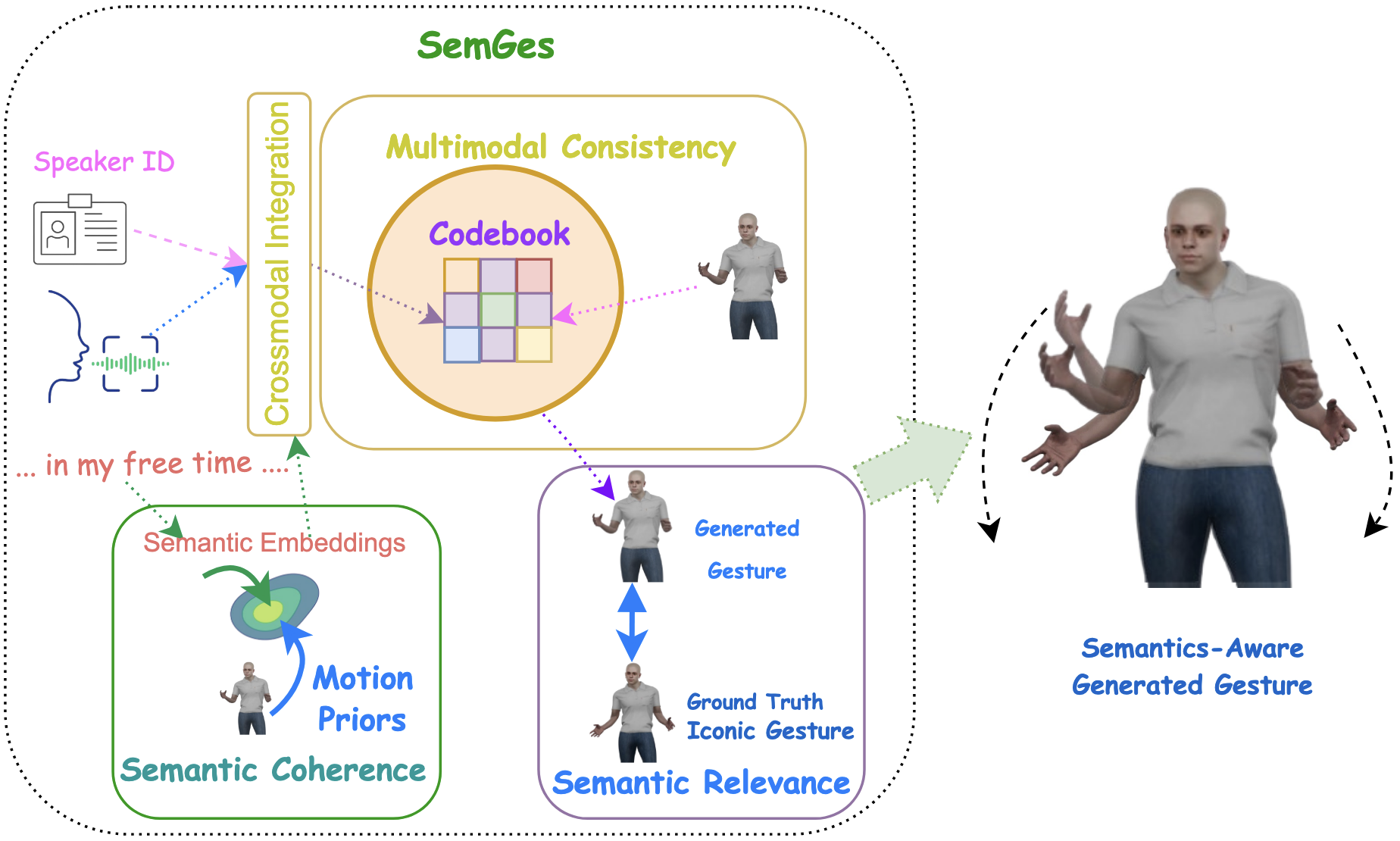

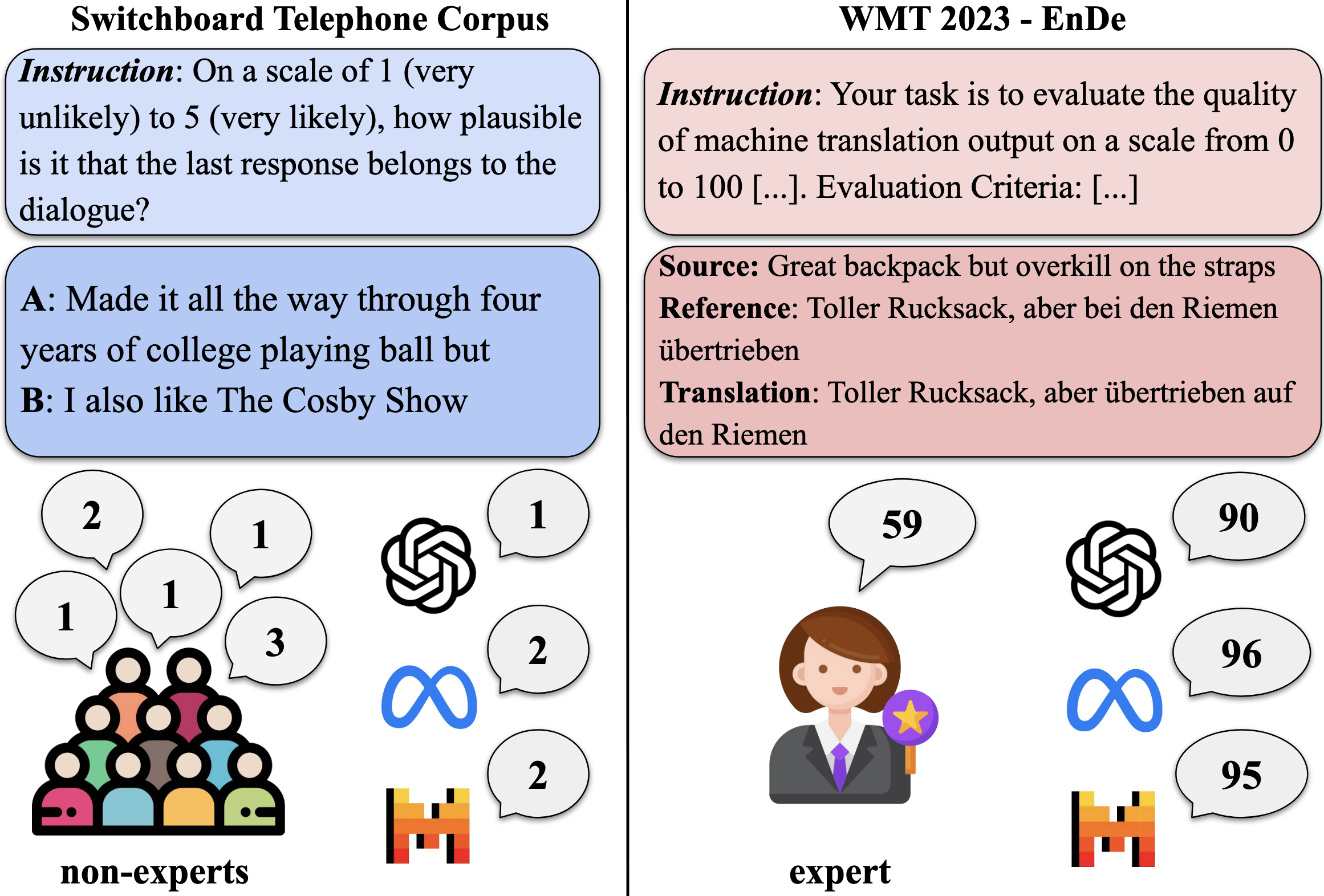

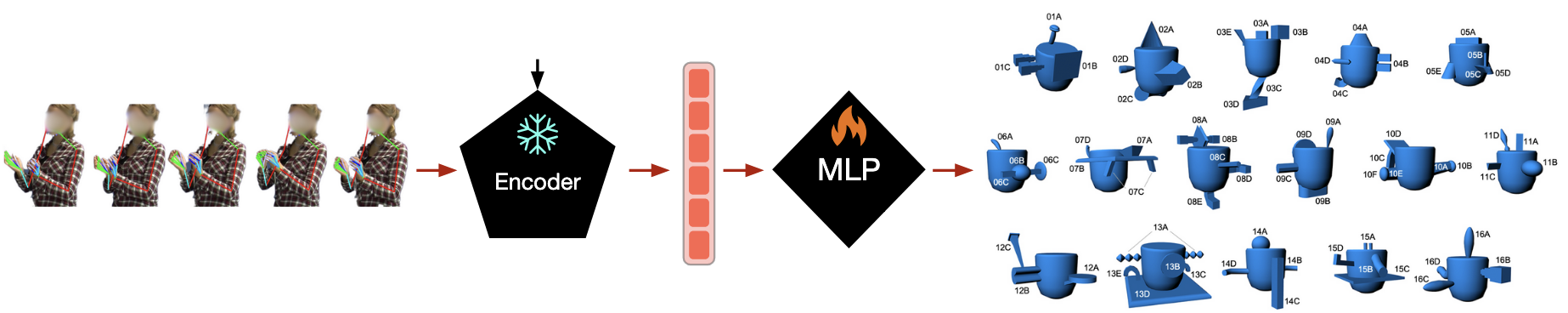

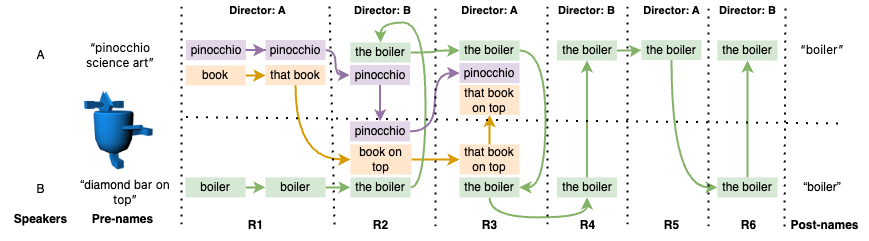

I am a research staff member in the Multimodal Language Department at the Max Planck Institute for Psycholinguistics (Nijmegen), where I lead the department’s Multimodal Modelling Cluster. My work builds and studies machine-learning methods for the segmentation, coding, and representation of visual communicative signals from motion-capture and video data, and uses the learned representations as testbeds for theories of multimodal language across languages and interactional settings. I also study how large language models and multimodal language models integrate and generate multimodal behaviour, and I develop generative models of gesture for virtual agents. Recent work includes an NWO XS-funded project on grounded, object- and interaction-aware gesture generation in context.

News

| Date | Item |
|---|---|
| Dec 20, 2025 | Donders Stimulation Fund Award |
| Dec 2, 2025 | Guest Lectures 2025 @ Radboud University |
| Oct 9, 2025 | Preprint on the Visual Iconicity Challenge |
| Oct 7, 2025 | PhD Opportunities Available at the Max Planck Institute for Psycholinguistics via MP-AIX |
| Sep 25, 2025 | Invited Talk 2025 @ Utrecht University |