Bodily Expressed Emotion Recognition

Skeleton-based explainable bodily expressed emotion recognition through graph convolutional networks

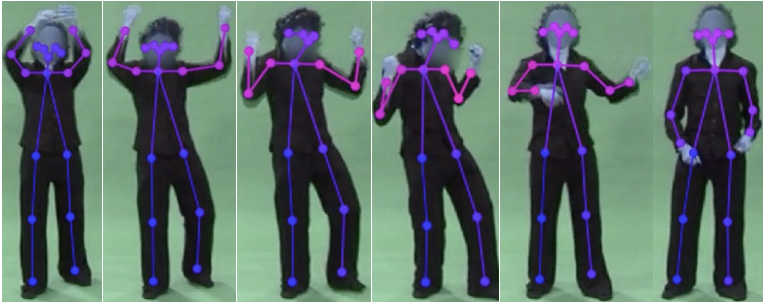

This is a pioneering study in the field of emotion recognition, since it shifts the focus of explainable AI from voice and face as expressive modalities to bodily expressions. In this study, I proposed a procedure based on state-of-the-art machine learning methods that offers both accurate performance and explainable decisions. Specifically, I developed an explainable framework for skeleton-based bodily expressed emotion recognition using Graph Convolutional Networks (GCNs). Comprehensive evaluations show that hand and arm movements are the most significant cues for automatic bodily expressed emotion recognition. These findings align with perceptual studies, which have shown that features related to arm movements correlate most strongly with human perception of emotions.
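To make the idea of a graph convolution over a skeleton, and of explaining its decision per body part, a bit more concrete, here is a minimal sketch in Python with NumPy. It is not the model from the paper. The 7-joint skeleton, the body-part grouping, the random (untrained) weights, and the class-activation-mapping (CAM) style relevance score are all illustrative assumptions. It also processes a single frame and omits the temporal convolutions that skeleton-based models typically apply across frame sequences.

```python
import numpy as np

# Hypothetical toy skeleton with 7 joints (not the joint layout used in the paper).
JOINTS = ["head", "neck", "l_hand", "l_elbow", "r_elbow", "r_hand", "pelvis"]
EDGES = [(0, 1), (1, 3), (3, 2), (1, 4), (4, 5), (1, 6)]

# Hypothetical grouping of joints into body parts for the explanation step.
BODY_PARTS = {
    "head": [0, 1],
    "arms_hands": [2, 3, 4, 5],
    "torso": [6],
}


def normalized_adjacency(num_joints, edges):
    """Symmetrically normalized adjacency with self-loops: D^-1/2 (A + I) D^-1/2."""
    a = np.eye(num_joints)
    for i, j in edges:
        a[i, j] = a[j, i] = 1.0
    d_inv_sqrt = 1.0 / np.sqrt(a.sum(axis=1))
    return d_inv_sqrt[:, None] * a * d_inv_sqrt[None, :]


def graph_conv(x, a_hat, w):
    """One spatial graph convolution: aggregate neighbours, project, apply ReLU.

    x: (num_joints, in_features), a_hat: (num_joints, num_joints),
    w: (in_features, out_features).
    """
    return np.maximum(a_hat @ x @ w, 0.0)


def joint_cam(features, class_weights, class_idx):
    """CAM-style relevance of each joint for one class.

    Assumes the classifier is global average pooling over joints followed by
    a linear layer, so the class logit equals the mean of these joint scores.
    """
    return features @ class_weights[:, class_idx]


def body_part_importance(cam, parts):
    """Average the absolute joint relevance within each body part."""
    return {name: float(np.abs(cam[idx]).mean()) for name, idx in parts.items()}


rng = np.random.default_rng(0)
num_joints, in_feat, hidden, num_classes = len(JOINTS), 3, 8, 4

x = rng.normal(size=(num_joints, in_feat))  # e.g. (x, y, z) coordinates of one frame
a_hat = normalized_adjacency(num_joints, EDGES)
w1 = rng.normal(size=(in_feat, hidden))       # untrained, random weights
w_cls = rng.normal(size=(hidden, num_classes))

h = graph_conv(x, a_hat, w1)                  # (num_joints, hidden)
logits = h.mean(axis=0) @ w_cls               # global average pooling + linear classifier
pred = int(np.argmax(logits))
cam = joint_cam(h, w_cls, pred)
print(body_part_importance(cam, BODY_PARTS))
```

With trained weights, pooling joint relevance into body parts in this way is one means of asking which regions (for example, the arms and hands) drive a prediction; with the random weights used here, the printed scores carry no meaning.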

In addition, I supervised a Master’s thesis on the same topic that took a different approach: the student used Convolutional Neural Networks (CNNs) to recognize emotions from body movements, with a focus on the model’s interpretability.

Transparent and explainable methods support scientific discovery by making it possible to check whether a model’s decisions are consistent with domain knowledge. This is an important research direction, as it can also help reduce bias and discrimination. More information can be found in the following publications:

- E. Ghaleb, A. Mertens, S. Asteriadis, and G. Weiss, “Skeleton-Based Explainable Bodily Expressed Emotion Recognition Through Graph Convolutional Networks,” in 2021 16th IEEE International Conference on Automatic Face and Gesture Recognition (FG2021), Jodhpur, India, 2021, pp. 1-8, doi: 10.1109/FG52635.2021.9667052.

- A. Mertens, E. Ghaleb, and S. Asteriadis, “Explainable and Interpretable Features of Emotion in Human Body Expressions,” in BNAIC/BeneLearn 2021, 2021.

This video shows a demo from my work titled “Skeleton-Based Explainable Bodily Expressed Emotion Recognition Through Graph Convolutional Networks,” which was presented at the 16th IEEE International Conference on Automatic Face and Gesture Recognition (FG2021) in Jodhpur, India, 2021: