Multimodal Face-to-face Dialogue Modeling

Understanding the emergence and maintenance of cross-modal alignment in face-to-face dialogues

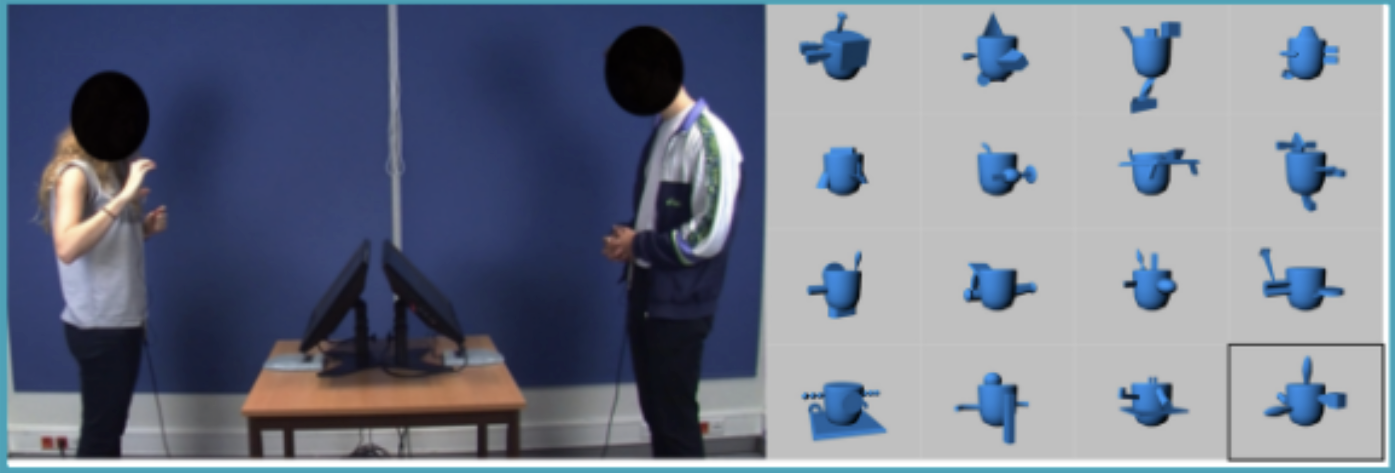

This project explores how interlocutors in dialogue develop a shared understanding of novel referents through cross-modal alignment, using both speech and gestures. To this end, we employ computational approaches from computer vision and natural language processing (NLP) to analyze the CABB dataset, a large-scale multimodal corpus of face-to-face, task-oriented dialogues developed within BQ3. In the CABB dataset, pairs of participants play a referential game: one participant (the director) describes a novel object, called a Fribble, while the other (the matcher) tries to find it, using any means of communication available, including speech and gestures.

This project has two main research goals: first, to identify when pairs of interlocutors align on novel referential targets through speech and gestures; and second, to identify regularities in the history of multimodal behaviors that lead to reference resolution and mutual understanding. Overall, this project contributes to understanding how humans use multiple modalities to establish mutual understanding, and to showing how AI methods can help analyze large-scale multimodal data without relying on rater-based analyses, which can be laborious and subjective.

The following research outputs are related to my work on dialogue coordination:

- Ghaleb, E., Burenko, I., Rasenberg, M., Pouw, W., Uhrig, P., Holler, J., Toni, I., Ozyurek, A. & Fernández, R. (2024). Co-Speech Gesture Detection through Multi-phase Sequence Labeling. In Proceedings of the IEEE/CVF Winter Conference on Applications of Computer Vision (WACV). To appear.

- Ghaleb, E. & Fernández, R. (2023, October 19–20). Linguistic alignment in referential communication: Automatic detection and analysis of its impact on shared conceptualization [Poster presentation]. (Mis)alignment in alignment research: A multidisciplinary workshop on alignment in interaction, Laboratoire Parole et Langage, Aix-en-Provence, France.